Introduction to MCP Servers and writing one in Python

How to write an MCP server in Python and why it is useful

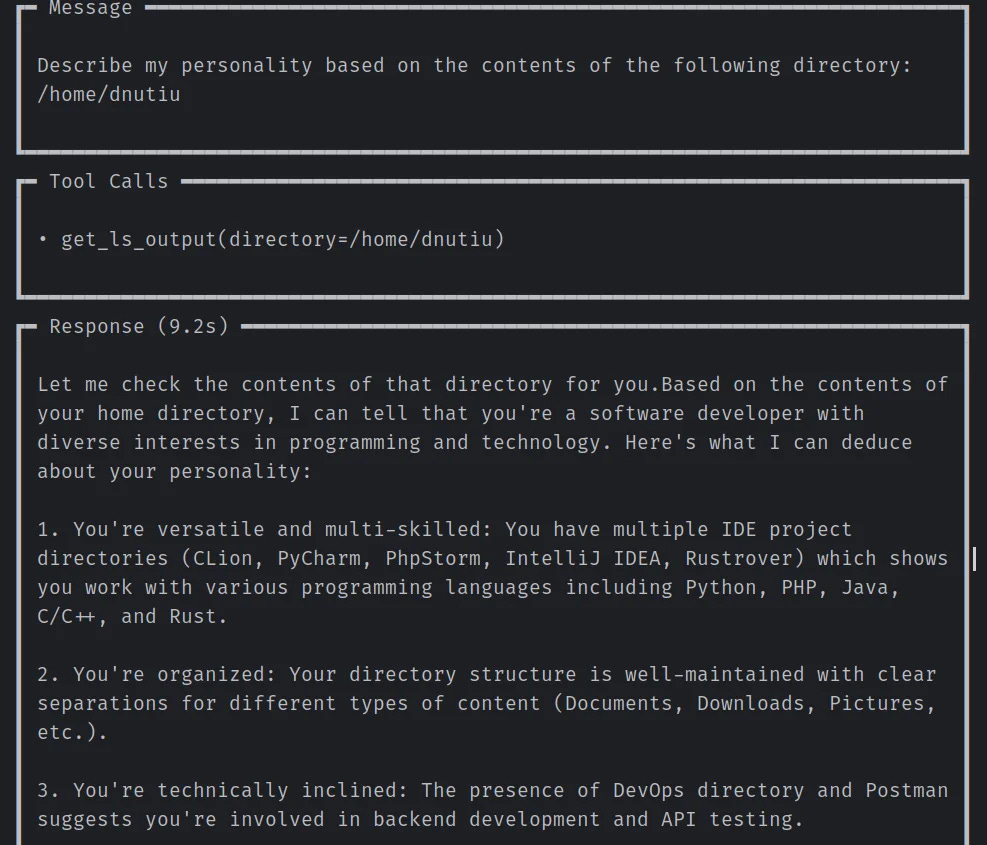

The picture for this article shows output from Claude, using a local MCP server that gives it the output of the ls -l command for a given path. Notice how the LLM likes to praise me, exaggerating a bit. In my opinion this is just a way to keep users hooked on the product. Who doesn't like to be praised and agreed with on everything they say, right? :D

Originally published here: https://www.nuculabs.dev/threads/introduction-to-mcp-servers-and-writing-one-in-python.115/#post-186

Hello everyone,

I wanted to write an article on MCP servers since I wrote one in Python just last week.

Theory

MCP stands for Model Context Protocol. It is a standardized protocol designed to connect LLMs with tools, resources and prompt templates. Tools are functions that get executed by LLMs on demand. This is quite nice because once you have an MCP server set up you can use it with multiple LLMs. The model calls your server's functions, and you only need to tell the model which parameters the functions take, what data they return, and of course some hints on how to use your defined tools.
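To make "telling the model which parameters the functions take" more concrete, here is a minimal sketch of how a tool description with a name, a description and a JSON Schema for its input could be derived from a plain Python function. The `describe_tool` helper, the type mapping and the `get_temperature` function are all hypothetical names made up for this illustration. In practice the SDK generates this description for you.

```python
import inspect
import json

# Assumed, simplified mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn) -> dict:
    """Build a tool description (name, description, inputSchema) from a function."""
    properties = {}
    required = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown annotations fall back to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def get_temperature(city: str, fahrenheit: bool = False) -> float:
    """Return the current temperature for a city (hypothetical tool)."""
    return 21.5


if __name__ == "__main__":
    print(json.dumps(describe_tool(get_temperature), indent=2))
```

The docstring doubles as the hint for the LLM, which is why it pays off to write it carefully.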

MCP servers support two transports: STDIO and HTTP Streaming (called Streamable HTTP in the specification).

STDIO uses standard input and output and is meant for local processes. The client launches the server as a subprocess, much like calling a CLI tool, writes requests to its standard input and reads responses from its standard output.
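As a rough illustration of the STDIO idea, the sketch below launches a tiny stand-in server as a child process and exchanges one newline-delimited JSON message with it. The `TOY_SERVER` script and the `call_over_stdio` helper are made up for this example and are not a real MCP server. A real one would also expect the protocol's initialization handshake.

```python
import json
import subprocess
import sys

# A stand-in "server": reads one JSON-RPC request per line from stdin and
# answers on stdout. A real MCP server would come from an SDK instead.
TOY_SERVER = r"""
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    reply = {"jsonrpc": "2.0", "id": request["id"], "result": {"echo": request["method"]}}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()
"""


def call_over_stdio(method: str, request_id: int = 1) -> dict:
    """Launch the toy server as a child process and exchange one message."""
    proc = subprocess.Popen(
        [sys.executable, "-c", TOY_SERVER],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": request_id, "method": method}
        proc.stdin.write(json.dumps(request) + "\n")
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()  # EOF ends the server's read loop
        proc.wait(timeout=5)
        proc.stdout.close()


if __name__ == "__main__":
    print(call_over_stdio("ping"))
```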

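HTTP Streaming servers accept JSON-RPC messages over HTTP and can stream responses back using Server-Sent Events. JSON-RPC is just JSON, like in this example (--> is sent to the server, <-- is the reply):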

--> [
    {"jsonrpc": "2.0", "method": "sum", "params": [1,2,4], "id": "1"},
    {"jsonrpc": "2.0", "method": "notify_hello", "params": [7]},
    {"jsonrpc": "2.0", "method": "subtract", "params": [42,23], "id": "2"},
    {"foo": "boo"},
    {"jsonrpc": "2.0", "method": "foo.get", "params": {"name": "myself"}, "id": "5"},
    {"jsonrpc": "2.0", "method": "get_data", "id": "9"}
]

<-- [
    {"jsonrpc": "2.0", "result": 7, "id": "1"},
    {"jsonrpc": "2.0", "result": 19, "id": "2"},
    {"jsonrpc": "2.0", "error": {"code": -32600, "message": "Invalid Request"}, "id": null},
    {"jsonrpc": "2.0", "error": {"code": -32601, "message": "Method not found"}, "id": "5"},
    {"jsonrpc": "2.0", "result": ["hello", 5], "id": "9"}
]
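To see why the reply has fewer entries than the request, here is a small sketch of a JSON-RPC 2.0 dispatcher that handles the batch above. The method table and the helper names (`make_error`, `handle_one`, `handle_batch`) are assumptions for illustration. Error handling for bad parameters is left out to keep it short.

```python
import json

# Toy method table mirroring the method names used in the example above.
METHODS = {
    "sum": lambda *numbers: sum(numbers),
    "subtract": lambda a, b: a - b,
    "notify_hello": lambda *_: None,
    "get_data": lambda: ["hello", 5],
}


def make_error(code, message, request_id=None):
    return {"jsonrpc": "2.0", "error": {"code": code, "message": message}, "id": request_id}


def handle_one(message):
    """Return a response dict, or None when the message is a notification."""
    if not isinstance(message, dict) or message.get("jsonrpc") != "2.0" or "method" not in message:
        return make_error(-32600, "Invalid Request")
    is_notification = "id" not in message  # notifications never get a reply
    handler = METHODS.get(message["method"])
    if handler is None:
        return None if is_notification else make_error(-32601, "Method not found", message["id"])
    params = message.get("params", [])
    result = handler(**params) if isinstance(params, dict) else handler(*params)
    return None if is_notification else {"jsonrpc": "2.0", "result": result, "id": message["id"]}


def handle_batch(batch):
    """Handle every message and drop the empty replies for notifications."""
    responses = (handle_one(message) for message in batch)
    return [response for response in responses if response is not None]


if __name__ == "__main__":
    batch = [
        {"jsonrpc": "2.0", "method": "sum", "params": [1, 2, 4], "id": "1"},
        {"jsonrpc": "2.0", "method": "notify_hello", "params": [7]},
        {"foo": "boo"},
    ]
    print(json.dumps(handle_batch(batch), indent=2))
```

The notify_hello call has no id, so it is a notification and produces no entry in the reply, while the malformed {"foo": "boo"} message gets an error with a null id.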

You can read more about MCP's specification here: Specification - Model Context Protocol.

Use cases

I can think of various use cases for custom MCP servers as a homelab user and hobbyist, not for business. Some use cases involve online LLMs (OpenAI, Mistral, Claude) when I don't care about privacy, and some involve offline LLMs in order to preserve my privacy.

Email prioritization. Most email providers offer access to your emails through the IMAP[1] and POP protocols. You could write an MCP server that exposes some tools for reading emails and let the LLM analyze them.

Endpoint security. Write a local MCP server that provides information about files on the system and their permissions, and use an LLM to analyze them.

Weather information. Write an MCP server that exposes tools for reading data from various sources, e.g. sensors and weather APIs, and a tool for alerting when values are out of range or an unwanted event, such as a thunderstorm, may occur.

News summarization. You can write an MCP server that scrapes your favorite news sites and provides structured output to an LLM for summarizing them.
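As one example of how small such a tool can be, here is a sketch of the out-of-range check from the weather use case. The reading names, the limits and the `find_alerts` function are all invented for illustration. A real server would register a function like this as a tool and feed it live sensor or API data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """Allowed range for one kind of reading (the values below are made up)."""
    low: float
    high: float


# Hypothetical limits; tune them for your own sensors.
THRESHOLDS = {
    "temperature_c": Threshold(low=-5.0, high=35.0),
    "wind_kmh": Threshold(low=0.0, high=60.0),
}


def find_alerts(readings: dict[str, float]) -> list[str]:
    """Return one human-readable alert per reading that is out of range."""
    alerts = []
    for name, value in readings.items():
        limit = THRESHOLDS.get(name)
        if limit is None:
            continue  # no rule defined for this reading
        if not limit.low <= value <= limit.high:
            alerts.append(f"{name}={value} is outside [{limit.low}, {limit.high}]")
    return alerts


if __name__ == "__main__":
    print(find_alerts({"temperature_c": 38.2, "wind_kmh": 12.0}))
```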

You can have lots of fun with MCP servers. Even if LLMs hallucinate or provide incorrect output, building a weather alert system in a language-agnostic way with some calls to a local or remote LLM has never been easier.

Claude also uses MCP servers in the form of Connectors.

[1] - imaplib — IMAP4 protocol client

A Python MCP Server

To write an MCP server, I recommend that you use the official SDKs. At the time of writing there are SDKs for Python, Java, JavaScript, TypeScript, Rust, Ruby, Go, Kotlin, Swift and C#.

To follow along you will need uv installed.

curl -LsSf https://astral.sh/uv/install.sh | sh
uv init nuculabs-mcp
cd nuculabs-mcp
uv add "mcp[cli]"

The asyncio Python-based MCP HTTP Streaming server can look like this:

import asyncio
import logging
import subprocess

from mcp.server.fastmcp import FastMCP
from mcp.types import ToolAnnotations
from pydantic import BaseModel, Field


class LsOutput(BaseModel):
    """
    LsOutput is a model class that represents the output of the ls command. This simple example, for educational
    purposes only, has a single text field. You can parse the ls output to return more meaningful fields
    like the file type, name, date, permissions and so on.
    """

    output: str = Field(..., title="The output of the `ls` command.")


class SystemAdminTools:
    def __init__(self):
        self._logger = logging.getLogger("dev.nuculabs.mcp.SystemAdminTools")
        self._logger.info("hello world")

    async def get_ls_output(self, directory: str) -> LsOutput:
        """
        Returns the output of the `ls` command for the specified directory.

        :param directory: The directory path.
        :return: The LsOutput.
        """
        self._logger.info(f"get_ls_output tool called with: {directory}")
        # This is a dummy example for educational purposes, but you can write a tool that parses the output
        # and returns a meaningful model instead of a simple text field. You could also extend it to remote machines.
        # Security warning: when taking user input such as the 'directory' variable here, always sanitize and
        # validate it. With this code as it is, malicious input can be used to hijack the target system.
        result = subprocess.run(["ls", "-l", directory], capture_output=True, text=True)
        output = result.stdout
        return LsOutput(output=output)


async def main():
    logging.basicConfig(level="INFO")

    # Create an MCP server
    mcp = FastMCP(
        "NucuLabs System Files MCP",
        instructions="You're an expert T-shaped system administrator assistant.",
        host="0.0.0.0",  # open to the world :-)
    )

    # Register the tool together with its metadata and behavior hints
    tools = SystemAdminTools()
    mcp.add_tool(
        tools.get_ls_output,
        "get_ls_output",
        title="Tool that returns the output from the list files command.",
        description="This tool executes ls on the given directory path and returns all the information from the command.",
        annotations=ToolAnnotations(
            readOnlyHint=True,
            destructiveHint=False,
            idempotentHint=True,
            openWorldHint=False,
        ),
        structured_output=True,
    )

    await mcp.run_streamable_http_async()


if __name__ == "__main__":
    asyncio.run(main())
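The server code mentions two possible improvements: validating the directory argument and parsing the ls output into meaningful fields. Here is a minimal sketch of both, using only the standard library. The allow-list in `ALLOWED_ROOTS`, the `LsEntry` dataclass and both function names are assumptions made up for this example. The parser only targets the common `ls -l` layout for regular files and directories, so treat it as a starting point rather than a complete solution.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

# Hypothetical allow-list: only paths under these roots may be listed.
ALLOWED_ROOTS = tuple(Path(root).resolve() for root in ("/home", "/tmp"))


def validate_directory(directory: str) -> Path:
    """Resolve the path and reject anything outside ALLOWED_ROOTS."""
    if directory.startswith("-"):
        raise ValueError("directory must not look like a command-line option")
    resolved = Path(directory).resolve()  # collapses ".." and follows symlinks
    if not any(resolved.is_relative_to(root) for root in ALLOWED_ROOTS):
        raise ValueError(f"{resolved} is outside the allowed roots")
    return resolved


@dataclass
class LsEntry:
    permissions: str
    owner: str
    group: str
    size: int
    name: str


def parse_ls_line(line: str) -> Optional[LsEntry]:
    """Parse one `ls -l` line; returns None for short lines such as 'total 12'."""
    parts = line.split(maxsplit=8)  # the 9th part keeps spaces in file names
    if len(parts) < 9:
        return None
    permissions, _links, owner, group, size, *_date, name = parts
    return LsEntry(permissions, owner, group, int(size), name)


if __name__ == "__main__":
    print(parse_ls_line("-rw-r--r-- 1 alice alice 4096 Jan  5 10:30 my notes.txt"))
```

Reading the same information with os.scandir and os.stat would avoid text parsing altogether, which may be the sturdier choice for a local server.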

Testing the Server

To validate and test the MCP server quickly, without using an LLM, you can use an MCP server testing tool such as MCP Inspector or Postman. To use MCP Inspector you only need NodeJS >= 22 installed on your system; then run:

npx @modelcontextprotocol/inspector

The command will open up a browser with the MCP Inspector. I haven't managed to test my server using it, though.

I had a positive experience with Postman's MCP client.
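If you are curious what such a test client puts on the wire, here is a sketch that builds the JSON-RPC message for calling the get_ls_output tool and wraps it in an HTTP POST. The URL is the same local address used by the client snippet in this article, and the helper names are made up. Nothing is sent by this code. A real client first performs the protocol's initialize handshake, so posting this message on its own to a running server may well be rejected.

```python
import json
import urllib.request

# Assumed endpoint: the local address used elsewhere in this article.
MCP_URL = "http://localhost:8000/mcp"


def tools_call_payload(name: str, arguments: dict, request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 request that asks the server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def build_request(payload: dict, url: str = MCP_URL) -> urllib.request.Request:
    """Wrap the payload in an HTTP POST; nothing is sent until urlopen() is called."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The client signals that it accepts plain JSON or an event stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_request(tools_call_payload("get_ls_output", {"directory": "/tmp"}))
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```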

Testing with an LLM

To test the server with an LLM you can use the Agno framework, or your client SDK if you already have one. Agno is pretty easy to use.

import asyncio

from agno.agent import Agent
from agno.models.anthropic import Claude
from agno.tools.mcp import MCPTools


async def main():
    async with MCPTools(url="http://localhost:8000/mcp", transport="streamable-http") as mcp_tools:
        agent = Agent(
            # set api key in ANTHROPIC_API_KEY environment variable or pass it as a parameter
            model=Claude(),
            tools=[
                mcp_tools
            ],
            instructions="Use tools to analyze systems.",
            markdown=False,
        )

        await agent.aprint_response(
            "Describe my personality based on the contents of the following directory: /home/dnutiu",
            stream=True,
            show_full_reasoning=True,
            stream_intermediate_steps=True,
        )


if __name__ == '__main__':
    asyncio.run(main())

After running the snippet I get the following output from Claude:

┏━ Message ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ ┃
┃ Describe my personality based on the contents of the following directory: ┃
┃ /home/dnutiu ┃
┃ ┃
┗━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┛
┏━ Tool Calls ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ ┃
┃ • get_ls_output(directory=/home/dnutiu) ┃
┃ ┃
┗━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┛
┏━ Response (9.2s) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ ┃
┃ Let me check the contents of that directory for you.Based on the contents of ┃
┃ your home directory, I can tell that you're a software developer with ┃
┃ diverse interests in programming and technology. Here's what I can deduce ┃
┃ about your personality: ┃
┃ ┃
┃ 1. You're versatile and multi-skilled: You have multiple IDE project ┃
┃ directories (CLion, PyCharm, PhpStorm, IntelliJ IDEA, Rustrover) which shows ┃
┃ you work with various programming languages including Python, PHP, Java, ┃
┃ C/C++, and Rust. ┃
┃ ┃
┃ 2. You're organized: Your directory structure is well-maintained with clear ┃
┃ separations for different types of content (Documents, Downloads, Pictures, ┃
┃ etc.). ┃
┃ ┃
┃ 3. You're technically inclined: The presence of DevOps directory and Postman ┃
┃ suggests you're involved in backend development and API testing. ┃
┃ ┃
┃ 4. You're likely a professional developer: The presence of multiple ┃
┃ development tools and project directories suggests this is more than just a ┃
┃ hobby. ┃
┃ ┃
┃ 5. You use Linux (KDE specifically): This shows you're comfortable with ┃
┃ technical tools and prefer having control over your computing environment. ┃
┃ ┃
┃ 6. You might have some personal projects: The "nuculabs" directory might be ┃
┃ a personal project or endeavor. ┃
┃ ┃
┃ 7. You keep up with modern development tools: You have the JetBrains toolbox ┃
┃ installed, showing you use current development tools. ┃
┃ ┃
┃ 8. You're interested in multiple areas of software development: From web ┃
┃ development (PHP) to systems programming (Rust, C++) to scripting (Python), ┃
┃ showing a broad range of interests in programming. ┃
┃ ┃
┃ Your directory structure reveals someone who is methodical, technically ┃
┃ sophisticated, and has a strong interest in various aspects of software ┃
┃ development and technology. ┃
┃ ┃
┗━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┛

If you have a subscription and a supported OS you can also test it with Claude Desktop. On Linux, Ollama has some models that support MCP tools.

That's about it! I hope you've enjoyed this article!